Building a Git Agent

Introduction

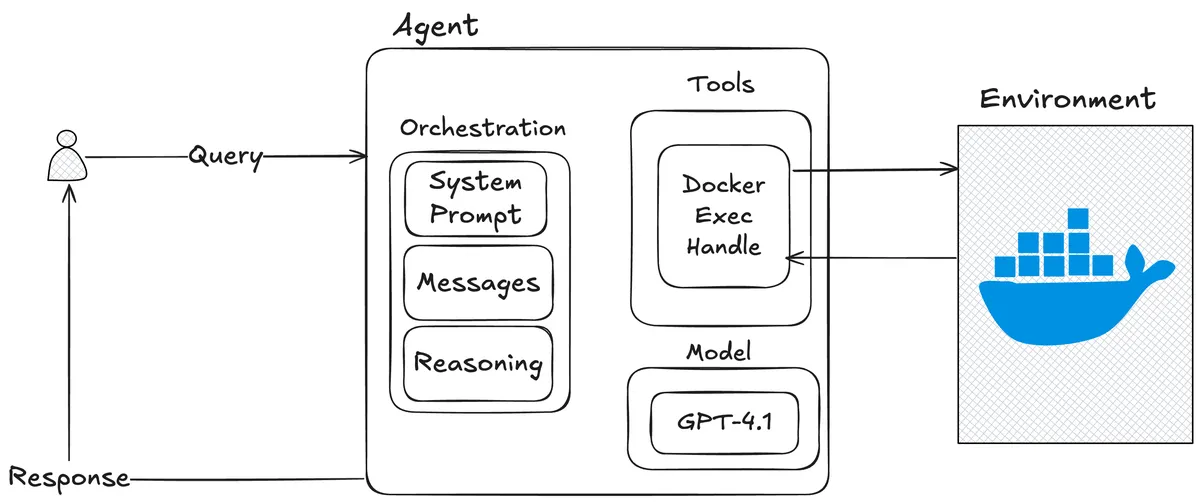

This blog post explores building an agent that can fulfil local Git tasks on behalf of users, such as creating a local Git repository and committing new files. The usefulness of a Git agent is questionable, and I wouldn't recommend using an agent to manage your Git operations. Hallucinations can have destructive effects, like force-pushing to a branch you are collaborating on with other developers, or pushing straight to main. The main goal of this post is to serve as an educational resource. It shows how to build a minimal agent by defining the environment, the tools, and the agent loop.

Architecture

Environment

The agent will interact with Git the way a human would, through a terminal (using the Git CLI and Unix commands). This means the agent needs a stateful environment in which to execute commands, ideally one that is also isolated from the rest of your system. Whenever an AI executes arbitrary code, system-level isolation is essential to prevent unwanted side effects such as data leaks or destructive operations. Such an environment is called a sandbox, and mature solutions exist, such as E2B or AWS Firecracker. For our agent, we can use a Docker container as a simpler alternative with weaker isolation guarantees, since this isn't being deployed in production.

Tools

When thinking about tools for an agent, I consider which primitives it needs to complete its goal (the ability to query a database, call an API, etc.). Our agent needs to be able to run commands in a container, just as a human would in a terminal. We will implement this in the code section later.

Model

GPT-4.1 will be used as the model. It's a great off-the-shelf API model that generalizes well and provides strong baseline performance across most tasks. Other models, like Qwen3-32B, would likely also perform well, and I encourage you to try different models to see how they compare (if cost is an issue, smaller models like GPT-4.1-mini and GPT-4.1-nano are great alternatives). As we will see later, what's important is ensuring that the model has rich enough context and strong tooling to complete the task.

Code

The full code is available here. The following sections omit details for the sake of readability. For further details and the requirements for running the agent, refer to the README.md.

Docker

There are a few requirements for the Docker container we are spinning up:

- The ability to execute Unix commands. We can use an Ubuntu image for this and set `tty=True` and `stdin_open=True` (setting these two arguments to true is what allows the container to be interactive).
- The container needs to be long-running. We can use the `sleep infinity` command to keep the container running until it is explicitly terminated, for example via a `SIGTERM` or `SIGKILL`.
- We need `git` installed. This can be done by `exec`'ing a command once the container is spun up.

The code snippet below shows the setup for the container:

```python
import docker

client = docker.from_env()  # connect to the local Docker daemon
work_dir = "/git-agent"

# Spin up the container
container = client.containers.run(
    image="ubuntu:latest",
    command="sleep infinity",  # keep the container alive until it is explicitly stopped
    detach=True,
    tty=True,
    stdin_open=True,
    working_dir=work_dir,
)

# Install git inside the container; demux=True returns stdout and stderr separately
exit_code, output = container.exec_run(
    "bash -c 'apt-get update && apt-get install -y git'", demux=True
)
if exit_code != 0:
    _, stderr = output
    container.stop()
    container.remove()
    raise RuntimeError(f"Failed to install git: {(stderr or b'').decode()}")
```

We are also setting `working_dir`, which makes the supplied directory the default directory (i.e. the one `pwd` reports) for commands the agent executes. After running this, you can verify that the container is running with `docker ps`.

Tools

We need a tool that can do the following:

1. Execute commands inside the container.
2. Return the output of the command.
3. Keep a stateful handle to the container (i.e. if the agent calls `cd`, the next tool call should execute its command in the `cd` target).

What we need is an exec handle to the container. The Docker SDK for Python's `container.exec_run(...)` meets needs 1 and 2 but not 3: each call spawns a new, ephemeral process, so state such as the current directory is lost between calls. Thankfully, `pexpect` gives us a stateful alternative: we can spawn a persistent shell in the container and send commands to it.

```python
import pexpect

# Spawn a persistent pexpect handle to the container, emulating a user interacting with the shell
def spawn_handle():
    child = pexpect.spawn(f"docker exec -it {container.id[:12]} bash", encoding="utf-8")
    child.expect(r"[$#]")  # wait for the shell prompt (root's bash prompt ends in '#')
    return child

handle = spawn_handle()
```
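One caveat with waiting on `r'[$#]'`: pexpect searches the incoming output for the pattern, so any `$` or `#` that appears before the real prompt (in the echoed command or in the command's own output) ends the match early. The sketch below approximates that search with `re.search` over an illustrative buffer, then shows a common mitigation: give the shell a distinctive prompt and expect that literal string instead (pexpect's `replwrap` module takes a similar approach). The buffer contents and the `[GIT-AGENT]> ` prompt are made up for illustration.

```python
import re

# Approximation: pexpect's expect() searches its buffer for the pattern, much like re.search
PROMPT_PATTERN = r"[$#]"

# Illustrative output after sending `echo 'price: $5'`: echoed command, command output, prompt
buffer = "echo 'price: $5'\r\nprice: $5\r\nroot@3f2a1b9c:/git-agent# "
match = re.search(PROMPT_PATTERN, buffer)
captured_naive = buffer[: match.start()]
print(repr(captured_naive))  # "echo 'price: "  (cut off at the first '$')

# Hypothetical mitigation: switch the shell to a distinctive prompt once, e.g. by sending
#   export PS1='[GIT-AGENT]> '
# and then expect that literal string rather than a bare [$#]
UNIQUE_PROMPT = "[GIT-AGENT]> "
buffer = "echo 'price: $5'\r\nprice: $5\r\n" + UNIQUE_PROMPT
match = re.search(re.escape(UNIQUE_PROMPT), buffer)
captured_unique = buffer[: match.start()]
print(repr(captured_unique))  # "echo 'price: $5'\r\nprice: $5\r\n"
```

With pexpect itself, the equivalent would be passing the escaped unique prompt to `handle.expect(...)`, or using `handle.expect_exact(...)`, which matches a plain string.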

With the handle in place, we need a tool through which the agent can send a command string to the handle and wait for the resulting output. The high-level flow and code of the tool are as follows:

1. Parse the `cmd`: we don't want the agent hallucinating and executing destructive operations like `rm -rf`. It's important to define guardrails within your tool definitions.
2. Send the `cmd` with `handle.sendline` and capture the response with `handle.expect`.
3. Capture the error if `sendline` fails, e.g. if the container was killed. Errors from the agent's commands, like `cd`'ing into a non-existent directory, are captured in step 2.

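```python
def execute_command(cmd: str) -> str:
    """Execute a shell command inside the container and return its output."""
    # Guardrail: reject forbidden commands before they reach the shell
    if not parse_command(cmd):
        return f"Error: Forbidden command: {cmd}"
    try:
        handle.sendline(cmd)
        # Wait up to 10 seconds for the shell prompt to reappear
        handle.expect(r"[$#]", timeout=10)
        ...  # output extraction omitted here; see the full code
        return output
    except Exception as e:
        # e.g. the container was killed or the command timed out
        return f"Error executing command: {str(e)}"
```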
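The `parse_command` guardrail called in `execute_command` is not shown in the post. A minimal denylist version might look like the sketch below; the rules (reject recursive `rm` and forced `git push`) are hypothetical examples. It tokenizes with the standard library's `shlex` so that quoted arguments such as commit messages are not mistaken for operators, and it splits compound commands on operators like `&&`, `;`, `|` and newlines. A denylist like this is easy to bypass (`sudo rm -rf`, `bash -c '...'`, `find -delete`), so an allowlist of permitted programs is usually the safer design.

```python
import shlex

# Characters treated as shell operators; runs of them (e.g. "&&", ";") become separator tokens
OPERATORS = "();<>|&\n"
OPERATOR_SET = set(OPERATORS)

def parse_command(cmd: str) -> bool:
    """Hypothetical guardrail: return False for commands we refuse to run, True otherwise."""
    lexer = shlex.shlex(cmd, posix=True, punctuation_chars=OPERATORS)
    lexer.whitespace_split = True  # split on whitespace and operators, honouring quotes
    lexer.whitespace = " \t\r"     # newlines separate commands in bash, so treat them as operators
    lexer.commenters = ""          # don't let a '#' hide the rest of the command from the check
    try:
        tokens = list(lexer)
    except ValueError:
        return False  # e.g. unbalanced quotes: refuse rather than guess

    # Break compound commands like `a && b; c | d` into simple commands
    commands, current = [], []
    for tok in tokens:
        if tok and set(tok) <= OPERATOR_SET:
            commands.append(current)
            current = []
        else:
            current.append(tok)
    commands.append(current)

    for command in commands:
        if not command:
            continue
        program, args = command[0], command[1:]
        short_flags = "".join(a[1:] for a in args if a.startswith("-") and not a.startswith("--"))
        # Recursive deletes: rm -r, rm -rf, rm -R, rm --recursive
        if program == "rm" and ("r" in short_flags.lower() or "--recursive" in args):
            return False
        # Force pushes: git push -f, git push --force, git push --force-with-lease
        if program == "git" and args[:1] == ["push"] and (
            "f" in short_flags or any(a.startswith("--force") for a in args)
        ):
            return False
    return True
```

For example, `parse_command("git add test.txt && git commit -m 'hello from agent'")` returns `True`, while `parse_command("ls && rm -rf /")` returns `False`.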

System Prompt

As mentioned above, well-defined (good docstrings!) and robust tools, along with rich context, are key to your agent's ability to perform the task through in-context learning. I recommend tuning the system prompt and testing your tools thoroughly during development.

To me, the system prompt defines the goal, tools, and invariants of the agent, and a lot can be gained from prompt engineering (I often use LLMs to help tune my prompts). When writing the prompt, you should:

- Set the goal and identity of the agent. E.g. "You are a git agent who will ...".

- Set the environment and tools for the agent. E.g. "You have access to the following tools ... and you should call them in the following way".

- Break down the workflow for the agent: what core steps should it take?

- Provide few-shot examples of simple tasks within the workflow.

Refer here for the full system prompt.
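For illustration only, a skeleton that follows the four points above might look like this. It is not the author's prompt (the full prompt is referenced above); the wording, the `build_system_prompt` helper, and the few-shot example are hypothetical. The example turn uses the same `think` / `tool_call` / `answer` shape as the response schema defined in the next section.

```python
def build_system_prompt(work_dir: str) -> str:
    """Hypothetical system prompt skeleton: goal, environment/tools, workflow, few-shot example."""
    # Doubled braces {{ }} render as literal braces in the f-string output
    return f"""You are a Git agent that completes local Git tasks on behalf of a user.

Environment and tools:
- You operate in an Ubuntu container with git installed; your working directory is {work_dir}.
- You have one tool, execute_command(cmd: str) -> str, which runs cmd in a persistent bash shell
  and returns its output. Shell state, such as the current directory, persists between calls.
- Destructive commands (e.g. rm -rf, git push --force) are rejected by the tool.

Workflow:
1. Inspect the current state (pwd, ls, git status) before changing anything.
2. Run one command per turn and read its output before deciding the next step.
3. Verify the result (e.g. git log --oneline) before giving a final answer.

Example:
User: Create a new local repository called demo.
Assistant: {{"think": "I need to initialise the repository.", "tool_call": {{"tool": "execute_command", "args": "git init demo"}}, "answer": ""}}
"""

prompt = build_system_prompt("/git-agent")
print(prompt)
```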

Pydantic

Enforcing strong typing (e.g. `Literal` types) and schemas throughout your codebase is important not only for code quality but also for parsing model output from token space into code space. Pydantic is a powerful library for enforcing schemas throughout your agent loop. (Pydantic itself defines and validates the schema; a wrapper such as Instructor is what passes the schema to the model along with the request and parses the reply.) We will use it to define schemas for the agent's tool calls and turn responses, which makes the agent loop easier to build. The alternative would be writing brittle regexes over model responses.

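```python
from pydantic import BaseModel

class ToolCall(BaseModel):
    tool: str  # name of the tool to call, e.g. "execute_command"
    args: str  # argument string passed to the tool

class AgentResponse(BaseModel):
    think: str
    tool_call: ToolCall | None = None  # optional: absent when the agent gives a final answer
    answer: str = ""  # empty until the agent gives a final answer
```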

Agent Loop

The agent loop is what drives the agent toward its goal. In practice, it is a programmatic loop of inference, tool calls, and exit conditions. The high-level flow of an agent loop is:

- Seed the initial conversation with the system prompt and request for the agent.

- Make a request for inference, either to your model locally or an API model.

- Parse the model response, extracting tool calls.

- Call tools to interact with the environment, get the environment feedback and append to the conversation.

- Repeat until the model returns an answer or the turn budget is exhausted.

Our case is no different. Below is the agent loop used for our agent:

```python
# Seed the initial conversation
repo_name = "git-test-repo"
messages = [
    {"role": "system", "content": system_prompt},
    {"role": "user", "content": f"Create a new local repository called {repo_name}, create a new branch called 'test', create a new file called 'test.txt', and commit it with the message `hello from agent`"},
]

# Agent loop
final_answer = None
turns = 0
while turns < 15:
    response = oai_instructor.chat.completions.create(
        ...
    )
    # Unpack the structured response (fields defined on AgentResponse)
    maybe_answer = response.answer
    maybe_tool_call = response.tool_call

    # Check if agent provided a final answer (task complete)
    if maybe_answer:
        final_answer = maybe_answer
        break

    # Check if agent wants to make a tool call
    if maybe_tool_call:
        # Call the tool and append the tool result
        ...
        messages.append({"role": "user", "content": f"<result>{tool_result}</result>"})
    else:
        print("Error: No tool call or answer found")
        break

    turns += 1
```

See the full loop here.
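The elided parts of the loop (unpacking the response and dispatching the tool call) can be sketched as a self-contained function. This is not the author's full loop: the model is any callable that takes the message list and returns a response, so a scripted fake stands in for the GPT-4.1 call, and dataclasses stand in for the Pydantic models. Appending the model's own turn as an `assistant` message, and the `run_agent` name, are assumptions made for this sketch.

```python
import json
from dataclasses import asdict, dataclass
from typing import Callable, Optional

# Stand-ins for the Pydantic models, so the sketch runs without an LLM or extra libraries
@dataclass
class ToolCall:
    tool: str
    args: str

@dataclass
class AgentResponse:
    think: str
    tool_call: Optional[ToolCall] = None
    answer: str = ""

def run_agent(
    model: Callable[[list], AgentResponse],
    tools: dict[str, Callable[[str], str]],
    messages: list,
    max_turns: int = 15,
) -> Optional[str]:
    """Run the agent loop; return the final answer, or None if no answer was produced."""
    for _ in range(max_turns):
        response = model(messages)
        # Keep the model's own turn in the history so it can see its previous tool calls
        messages.append({"role": "assistant", "content": json.dumps(asdict(response))})
        if response.answer:
            return response.answer
        if response.tool_call is None:
            return None  # neither a tool call nor an answer: stop
        tool = tools.get(response.tool_call.tool)
        if tool is None:
            result = f"Error: unknown tool {response.tool_call.tool}"
        else:
            result = tool(response.tool_call.args)
        messages.append({"role": "user", "content": f"<result>{result}</result>"})
    return None  # turn budget exhausted

# Usage with a scripted fake model and a fake tool (both hypothetical)
script = iter([
    AgentResponse(think="Create the repo.", tool_call=ToolCall("execute_command", "git init demo")),
    AgentResponse(think="Done.", answer="Created the repository 'demo'."),
])
fake_tools = {"execute_command": lambda cmd: f"ran: {cmd}"}
history = [{"role": "system", "content": "..."}, {"role": "user", "content": "Create a repo called demo"}]
answer = run_agent(lambda msgs: next(script), fake_tools, history)
print(answer)  # Created the repository 'demo'.
```

Keeping the model behind a plain callable makes the loop easy to test without network calls; in the real agent, the callable would wrap the `oai_instructor.chat.completions.create(...)` request.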

Here is the output of the last two turns of an agent run:

```
=== Turn 13 ===
Thinking: Git user name and email are now configured locally. Next, I need to add 'test.txt' and commit it again with the correct message.
Tool call: execute_command
Args: git add test.txt && git commit -m 'hello from agent'
Tool result: git add test.txt && git commit -m 'he
ello from agent'
[test (root-commit) 22b03d8] hello from agent
1 file changed, 0 insertions(+), 0 deletions(-)
create mode 100644 test.txt

=== Turn 14 ===
Thinking: I've verified that the repository 'git-test-repo' was created, a 'test' branch was made and switched to, 'test.txt' was created, user identity was set, and the file was committed with the correct message. All steps have been completed and verified.
Final answer: Successfully created and initialized the git repository 'git-test-repo', created and switched to the 'test' branch, added a new file 'test.txt', and committed it with the message 'hello from agent'. All actions are complete and verified
```

Note that this code is not optimal and the agent might not succeed every time. I had to increase the turn limit from 10 to 15 because the agent would execute redundant commands, such as multiple `ls` calls.

Conclusion

This post has built a simple Git agent as an educational resource, capturing the core pieces of a basic agent. The system is far from ideal; for example, the conversation is not durable. If the agent experiences an unclean shutdown, it would need to resume from the most recent step, because the environment is stateful: starting the task over could conflict with work already done in the container (e.g. a repository that already exists). Persisting the conversation after each step (checkpointing) would allow the agent to resume from the latest checkpoint.
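A minimal sketch of conversation checkpointing, using only the standard library. The function names, file name, and JSON layout are hypothetical. Writing to a temporary file and then calling `os.replace` means a crash mid-write leaves the previous checkpoint intact. Note that this only persists the conversation. The container's state must also survive (or be rebuilt), since the messages describe work done in that environment.

```python
import json
import os
import tempfile

def save_checkpoint(path: str, messages: list, turn: int) -> None:
    """Persist the conversation so that a crash never leaves a half-written checkpoint."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump({"turn": turn, "messages": messages}, f)
        f.flush()
        os.fsync(f.fileno())  # make sure the bytes are on disk before the rename
    os.replace(tmp_path, path)  # atomic rename on POSIX

def load_checkpoint(path: str):
    """Return (messages, turn) from the last checkpoint, or None if there is none."""
    if not os.path.exists(path):
        return None
    with open(path) as f:
        data = json.load(f)
    return data["messages"], data["turn"]

# Usage (hypothetical file name, in a throwaway directory)
with tempfile.TemporaryDirectory() as tmp_dir:
    ckpt_path = os.path.join(tmp_dir, "git-agent-checkpoint.json")
    before = load_checkpoint(ckpt_path)  # None: nothing saved yet
    save_checkpoint(ckpt_path, [{"role": "user", "content": "hi"}], turn=3)
    restored = load_checkpoint(ckpt_path)
print(before, restored)  # None ([{'role': 'user', 'content': 'hi'}], 3)
```

In the agent loop, `save_checkpoint` would be called after each tool result is appended, and `load_checkpoint` at startup to seed `messages` and `turns` instead of the fresh conversation.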

Durability is one of many considerations for making this program production-ready. Others include proper monitoring and observability, better practices (e.g. a more robust sandbox than Docker), robust error handling (how would the agent handle losing its connection to the container?), and further testing and validation, for example against a behavior specification like OpenAI's Model Spec.